What happens after you’ve done some user research for your service? Once the decisions are made, do you move on and forget it? Or do you preserve that research for reuse and for future team members?

I was discussing this challenge with Stephanie Rosenbaum. Stephanie is a distinguished user researcher and technical communicator; her business, TecEd, Inc., celebrated its 50th year in 2017.

We decided that the 2019 Service Design in Government conference (SDinGOV) was a perfect opportunity to get a group of people to think about what we do now after user research, and what we ought to do in future.

This note:

- describes our workshop and what we learned

- includes some links to find out more about ResearchOps

- has our slides at the end.

We would both like to thank the workshop attendees – a lively and thoughtful group of people with great ideas. I’d like to thank Stephanie for coming over to the UK from California especially for this workshop. This post reflects our joint work in preparing and running the workshop, and capturing the Post-Its. I did some further grouping and reflection afterwards, and I hope that I have represented Stephanie’s views correctly.

We started the workshop with some reflections on ResearchOps

Good user research is crucial for service design, a point emphasised by the GOV.UK Service Standard.

Years ago, we worked hard to persuade clients and colleagues to do any user research at all, and even then it was often occasional at best. Our typical research activity was a big set piece with a formal report. Even so, we had a lot of tidying-up to do after our research activities.

These days, it’s far more typical to do frequent, iterative user research – a move that we are greatly in favour of. But in some ways this has made it harder to keep up with post-research activities, such as preserving insights so that the people who need them can find them.

ResearchOps is the work that we do so that we can do good research

When Kate Towsey founded the ResearchOps community in 2018, she tapped into something many of us felt and wanted to discuss: how can we organise our work so that we can do good research?

As she put it, drawing on a combined effort by many ResearchOps community members:

“ResearchOps is the mechanisms and strategies that set user research in motion.

It provides the roles, tools and processes needed to support researchers in delivering and scaling the impact of the craft across an organisation.”

Kate Towsey, talk at UX Brighton 2018

ResearchOps accounts for about 70% of a user researcher’s time

Leisa Reichelt’s 2014 post ‘What user researchers do when they’re not researching’ says that around 70% of user researchers’ time is spent on what we might now call ResearchOps: all the tasks that user researchers do before, during and after time spent with actual users. These include:

- working with the team to improve the service

- communicating what we know

- preparing for research

- looking at site analytics.

Stephanie and I suggested that, now that frequent, iterative user research is accepted as good practice, we need to add to this list:

- reviewing previous research for relevant insights, and

- organising what we’ve done for reuse later.

ResearchOps includes everything on Leisa’s list, the additions that Stephanie and I suggested, and much more: recruiting the user researchers themselves is an obvious extra. But in this workshop, we decided to focus specifically on the things that we do after research, and particularly on research debt.

Research debt is all the work that we leave to our future selves

It’s only possible to review previous research when the researcher has made time to organise the outputs from the research so that we can access them.

It is very tempting to postpone or neglect these after-research activities, especially in a rapidly moving iterative research process. Our term for the work that we leave to our future selves is “research debt”. Ironically, this post is itself a glaring example of my own research debt: the workshop was in March, but events intervened and I didn’t find time to write it up until July.

At the SDinGOV conference, Lucy Stewart and Mathew Trivet gave a presentation on ‘Building a user research library for local government’, and I’ve heard of similar projects in a variety of government departments. But a library is only useful when researchers make the effort to contribute to it.

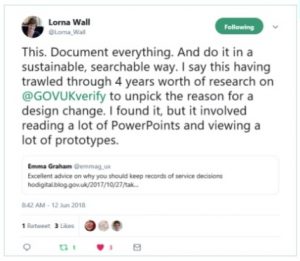

Research debt doesn’t only affect our own to-do lists. Lorna Wall’s heartfelt plea on Twitter to ‘document everything’ (see screenshot) perfectly illustrates the cost in lost time and value when previous research has not been documented in a way that makes it easy for future colleagues to review. (Lorna recommended Nadia Huq’s checklist, which we return to at the end of this post.)

Even when the original user researchers do find time to organise what they have done for later review, some kinds of research debt may appear later. Businesses, technology, and people move on. Recently, the team I work with at NHS Digital knew that some research on a topic they were investigating had already been done, but there had been two changes of personnel and also a significant change to the technologies they were using. The records and reports from the research had been deleted during the technology change because it was seen as a finished project. It took a lot of detective work to track down people who had been involved at the time and who, fortunately, recalled enough detail for our purposes.

In better news, if we think about possible research debt when we create a deliverable, then some of the necessary work is quick and easy. I’m grateful to past me for including a link or source for each point in our presentation. It took only a tiny bit of effort at the time, and it has made writing up this note a lot quicker.

We asked participants to identify outputs from research

Once we had introduced the ideas of ResearchOps and research debt, we asked people to note down anything that they could think of as an output from research. We suggested that they consider:

- Tangible: such as objects, things you pick up / set down / back up

- Intangible: such as decisions, opinions, empathy, ‘lightbulb moments’

- Immediate: answers to the research questions, explorations of hypotheses

- Longer-term: deeper understanding of users, better ideas about how to meet their needs.

To give a bit of space for introverts, and to get variety, we asked people to write their ideas down individually at first and then to compare and group them with others at their tables.

The classification here is mine, interpreting the groupings made by teams. You may classify topics in other ways, and I definitely found overlaps.

Research creates a lot of immediate, tangible outputs

Many people mentioned the things that we collect because we are doing research with people:

- consent forms

- demographic data

- recruitment lists

- Digital Inclusion Scale (a scale that captures a view of the participant’s level of comfort with digital technology)

And, of course, the basic data that we collect:

- raw data

- photographs

- interview notes

- Pens of Power (paper pages marked up with the participant’s reactions to the content)

- questionnaire, vote, and poll answers

- recordings: video and audio, or media in general

- and most of all: Post-Its, the output mentioned most frequently.

People mentioned things that are needed before research and also help to put it into context later:

- Hypotheses and research questions

- Research or discussion guides, questions asked

- Screenshots of web pages before and after

- Prototypes and toolkits

- Meeting notes.

And outputs that are typically created at the same time as the research or immediately afterwards:

- Spreadsheets, perhaps with notes or transcripts

- Transcripts of interviews

- Heat maps

- Mark-up of recordings, audio clips, and video clips

- Quotes

- User research roundups in the Wiki

- End of the day emails.

Participants create many types of reports and maps

Many people working in user experience love a map, and service designers tend to be especially fond of them. As we expected at a service design conference, participants mentioned creating a wide variety of maps from their research:

- Journey maps (the most popular); one person specifically mentioned the ‘as-is’ journey map and another “Journal maps [2 – 3 meters long, brown paper covered in Post-Its]”

- Actor’s map

- Empathy maps

- Pain points

- Service maps

- Speedboat poster with anchors [pain] and engines [good stuff]

- System and organisation map

- VPC (value proposition canvases).

We expected many people to mention reports of various types, and we heard many variations on that theme (as well as, simply, “Reports”):

- Actionable insights, conclusions, decisions, findings, observations, recommendations, statistics, and to-do lists: “Why we choose to focus on certain concepts to develop”

- User needs, content for user needs, narratives about service needs, poster with user needs, user stories, and user needs Trello board (a Trello board being one of the many tools for managing activities within a team or keeping track of insights)

- Slide decks, decks for particular groups such as teams or stakeholders, and PowerPoints (or, as one participant put it: “PowerPoints, PowerPoints, PowerPoints”)

- Debrief documents, design interventions, design patterns

- Final reports and final research summaries.

Participants thought of many ways that research influences people

Because we specifically asked for ‘intangible’ outputs, we expected a variety of ways that research influences ourselves, our teams, and colleagues or stakeholders. These overlap with the insights and recommendations that go into reports and to-do lists, so you will see some repeated items.

Participants mentioned:

- Findings, aha! and design insights, critical points/moments of truth, evidence, tacit knowledge, insights to users and needs, things which are important to people

- Agreement, best ways of working together in team, common understanding, breadth, better understanding of what people are trying to do

- Empathy

- Build tribe, grow team

- Understanding the user experience, wider understanding of “the problem”, context.

They mentioned outputs that related to influencing stakeholders and decision-makers – some perhaps within the team, some outside:

- Data for decision-making, insight that informs project or service decisions, opportunities for improvement, principles for design

- Appetite for more research among project sponsors

- Business case, spend money, sustain funding, justify spending

- Empathized stakeholders, recognition that other people need to be in the room, relationships, stakeholders’ buy-in.

And topics that look towards further iterations of research:

- Support organisational growth

- More hypotheses, further research questions, more questions, new directions, next step

- Insights: better understanding of user segments, how they can be created/defined/updated and tracked over time

- Ideas that need sharing or impact

- Institutional wisdom -> loss of context over time

- Where/what we need to design/develop

- Thematic insights/patterns

- Learnings for best practice “next time we will …” “next time we won’t …”.

The variety of outputs reflects the complexity of ResearchOps

We specifically asked people at the workshop to consider only the outputs from research.

We did not ask them to think about any of the other things that people who do research have to do in order to make research happen, and definitely not wider aspects of ResearchOps such as recruiting researchers and creating a career path for them.

Even so, we were impressed by the wonderful variety and range of the outputs, including many that we expected (consent forms, raw data) and some that we didn’t (the speedboat poster).

My favourite is the Post-It that reflected the way in which research, probably including this workshop, can change minds: “prove old people wrong”, with the word ‘old’ later annotated with the word “entrenched”.

We asked participants to think about what they do with those outputs

Once we had a variety of outputs, we asked people to consider whether or not we need policies for looking after these outputs. We suggested four categories:

- We have a clear policy for this and we do it immediately

- We have a policy and we get round to it occasionally

- We don’t have a policy but we wish we did

- We don’t have a policy and we don’t see any need for one.

This categorisation itself produced some reflection and discussion. For example, we heard questions and discussions about:

- What does ‘policy’ mean anyway? Does it include legal frameworks such as privacy laws? What about ‘things that we do as a team’ but that are not written down?

- Do we need to (or is it helpful to) distinguish between things that are mandated across our organisation and things within our area or team?

- What about an organisation that is changing? Are we looking at what we’ve done in the past, what we do right now, or what we might do in future after the changes?

- What about things that we do, and do consistently and immediately, but we don’t have any actual policy about them?

- What about things where our policy, or way that we do them, is ‘this is up to the researcher’s or team’s judgment at the time’? Is that a policy or not?

All of these are questions that we believe are well worth considering.

For the purposes of this write-up, and based somewhat on the way that people grouped things on their tables, I have used a slightly different classification:

- something we stick to (whether or not there is a policy for it)

- something that we do, but inconsistently

- we don’t have a way of doing this but wish we did

- we don’t need rules about this.

We also asked people to identify whether they consider any of the suggestions (their own, or from other people at the table) as a ‘best practice’.

Participants are careful about raw data and consent forms

As we expected, many people identified control of raw data, consent forms, and other sensitive items as things that they stick to.

Some people acknowledged that they could be more consistent. For example: “We have just developed policy for dealing with citizen-submitted sensitive info, but feel it is only halfway”.

A few people mentioned that they had no clear way of handling the deletion of data.

The two best practices mentioned in this area are:

- [A policy] for handling sensitive data (including having a schedule for deletion)

- Delete transcripts and keep anonymized data.

The picture for longer-term outputs was mixed

Ideas about handling longer-term outputs, such as reports, personas, and other final deliverables (including maintaining a research library), were split roughly evenly between ‘something we stick to’ and ‘we don’t have a way of doing this but wish we did’.

Examples of ‘something we stick to’:

- Document deliverables (project management office makes us do it)

- Having a research library or repository.

But those also came up several times as things we would like to have.

In contrast, we also saw “reports” and “personas” placed in the “we don’t need a policy” area, which, in hindsight, is ambiguous. Did people consider that they already handle those things well enough that no policy is necessary? Or do they consider that they don’t need reports or personas?

The three best practices mentioned in this area are:

- personas, user journeys, and needs [get turned into] user stories

- user needs across adjacent services

- keep a track and identify sensibilized stakeholders (we understand ‘sensibilized’ to mean ‘aware of user-centred design and keen to encourage it’, but we may be wrong).

At the team level, we learned that there is much variety in ways of working

Some people worked in teams that had clear ways of working in general, and others mentioned individual topics such as (at a detailed level) coding of Post-Its or (more broadly) having a Head of Practice.

Others mentioned that they felt the need for clarity about ways of doing some things. At the general level, for example: “It’s a bit of a free-style approach” and “We have an undocumented approach”. More specifically, formatting of reports and deliverables came up more than once in this category, and the topic mentioned most often was synthesis.

The five best practices mentioned in this area are:

- Playbook

- Filing convention

- Peer review for plan/strategy

- Week notes for decision making

- Tombstoning (which we learned did not mean the dangerous sport of jumping into the sea from high cliffs, but instead the rather less life-threatening practice of having a thorough review of a research project when it has ended – sometimes known as ‘post-mortems’).

We closed with some ideas about reducing research debt

When we were preparing for the workshop, Lorna Wall’s tweet led us to Home Office content designer Nadia Huq’s useful checklist, ‘Why you should keep records of service decisions’. She recommends that you:

- Write down names and job titles of everyone involved in the build, from senior management to developers to contacts at GOV.UK.

- Keep a list of additional functionality or design changes you would have explored if you’d had the time.

- If you can, put together a map of the end to end journey. They’re great for quickly getting a bird’s-eye view of a service.

- If you do a heuristic review of an existing service, include screenshots of it at the time of review. Years down the line, without screenshots, it can be really tricky to work out what you were looking at.

- Save everything in one place, with a clear taxonomy and naming conventions.

I suggested the Research Debt sprint, mainly for teams working in agile 2-week sprints. The idea is to:

- Set aside one sprint each quarter for paying research debt

- Do not do any new research in the sprint

- Organise and clear up the stuff

- Think about the insights and gaps

- Write some reports, publications, blog posts.

And Stephanie suggested the Project Closedown (also known as Tombstoning or a Post-Mortem), mainly for agencies working on specific engagements:

- Set aside a week of close-down time after each project

- Organise and clear up the stuff

- Do a retro on the project:

  - What went well

  - What went badly

  - What we’ll do more of next time

  - What we’ll avoid next time

- Write a whitepaper, case study, marketing literature.

Find out more about ResearchOps

If you would like to know more about ResearchOps, then I recommend Emma Boulton’s July 2019 post on Medium, ‘The Eight Pillars of User Research’. It summarises the work done by the ResearchOps community to define what we mean by ResearchOps, explains how ResearchOps supports user research, and credits key people who have contributed to it.

If you’d like to join the ResearchOps Slack, the community’s main place to discuss and learn, then use this Google form to join the ResearchOps waitlist. Typically, the organisers add people to the community about once a month.

Run this workshop with your team

We had an hour at SDinGOV to explore these ideas, and we’d have loved a lot longer. Afterwards, I thought that this might be a workshop that could benefit teams, perhaps at a user-centred design team away day or a user researchers’ meet-up. If you would like to use or adapt our slides and this post for your team, please go ahead.

Our only request is that you keep the Creative Commons licence: credit us and, more importantly, the people whose ideas we drew on for our workshop.

Overall, we learned that avoiding Research Debt is not easy but is worthwhile

Looking back over this lengthy post, it’s clear that user research in service design produces many things: from obvious, detailed outputs such as consent forms and raw data, through to invaluable “aha! moments” and building consensus within our teams, across teams, and across organisations.

We also recognised that we are mostly working hard to manage what needs to be managed, and that it’s ok to relax about some things where we can simply let whatever happens happen.

The area that I would have loved to explore further, if time had allowed, was the ‘best practice’ ideas. People contributed some intriguing snippets, but I felt that a lot of wisdom about best practices in the room had to remain untapped because we all needed to head for lunch.

Thanks again to everyone who came – your thoughts and ideas were great.