I had the honour of working with Ginny Redish on this article on readability formulas. Ginny is a leading authority on writing, accessibility, content design, and usability. I’ve lost count of the number of copies of her excellent book, Letting Go of the Words, that I’ve given away.

Readability formulas: seven reasons to avoid them and what to do instead

If you’ve ever had your computer give you a readability score or a grade level for something you’ve written, you’ve run a readability formula. Readability formulas are easy to use and give you a number. This combination makes them seductive. But a number isn’t useful if it isn’t reliable, valid, or meaningful.

In this article, we’ll explain how readability formulas work and give you seven reasons why we strongly recommend that you avoid them. We’ll also show you better ways to learn whether the people you want to reach can find, understand, and use your content.

Readability formulas work by counting text features

Readability formulas count just a few features of a text, put the counts into a mathematical formula, and give you a number – usually a school grade or a reading age. The number is meant to predict how easily people with different literacy levels would be able to read the text.

Among the many readability formulas, the most common are the following five:

- Flesch Reading Ease – Counts average sentence length and average syllables per word, gives a number up to 100, and weights long words more than long sentences.

- Flesch-Kincaid – Counts average sentence length and average syllables per word, gives a grade level, and weights long sentences more than long words.

- SMOG Index – Counts the percentage of words with three or more syllables.

- Gunning Fog Index – Counts average sentence length and the percentage of long words.

- Dale-Chall Formula – Counts average sentence length and whether words are on a list of words that were known to some American fourth-graders in 1984.
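For readers who want to see the arithmetic, here is a minimal Python sketch of four of these formulas, using the coefficients as they are commonly published. It assumes the counting has already been done; the function names and the sample counts are our own, for illustration only.

```python
import math

def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    # Higher means "easier"; typical texts fall between 0 and 100.
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)

def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    # Returns a US school grade level.
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59

def smog_grade(polysyllables: int, sentences: int) -> float:
    # Polysyllables = words of three or more syllables,
    # scaled up to the 30-sentence sample that SMOG expects.
    return 1.0430 * math.sqrt(polysyllables * (30 / sentences)) + 3.1291

def gunning_fog(words: int, sentences: int, complex_words: int) -> float:
    # "Complex" words are, roughly, words of three or more syllables.
    return 0.4 * ((words / sentences) + 100 * (complex_words / words))

# Hypothetical counts for one passage: 100 words, 5 sentences,
# 150 syllables, and 12 words of three or more syllables.
print(round(flesch_reading_ease(100, 5, 150), 1))   # 59.6
print(round(flesch_kincaid_grade(100, 5, 150), 1))  # 9.9
print(round(smog_grade(12, 5), 1))                  # 12.0
print(round(gunning_fog(100, 5, 12), 1))            # 12.8
```

With these hypothetical counts, the same passage comes out at grade 9.9, 12.0, or 12.8, depending on which formula you pick.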

You can see that most readability formulas focus on long sentences and long words. Indeed, two guidelines for clear writing are to write short sentences and use short words. But making your content useful and usable for the people you want to reach requires much more than observing these two guidelines.

Do not use readability formulas for these 7 reasons

There are seven reasons to avoid relying on readability formulas:

1. Readability formulas are not reliable

Different readability formulas and programs often contradict one another.

Different formulas used on the same text give different results

If readability formulas were reliable, different formulas measuring the same text would all give similar grade levels. But that’s not what happens. For instance, the SMOG Index typically scores at least two grade levels higher than the Dale-Chall formula. The Gunning Fog Index sometimes comes out three grade levels higher than other formulas.

Same formula on the same text in a different program can give different results

In the early days of readability formulas, 40-70 years ago, you would have had to count by hand. Today, of course, if you wanted a readability score, you would rely on a computer program.

But a study by researchers at the University of Michigan found that different computer programs running the same text through the same formula gave different scores. (Shixiang Zhou, Heejin Jeong, and Paul A. Green, How Consistent Are the Best-Known Readability Equations in Estimating the Readability of Design Standards? IEEE Transactions on Professional Communication, March 2017.)

How can that happen? It turns out that the programs vary in how they count specific features of a sentence. Is the date 2019 one syllable or four syllables? Does the program count a semicolon like a period? Just treating such things differently can change the score your content gets.
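Here is a minimal Python sketch of how that happens, assuming two hypothetical programs that both apply the Flesch-Kincaid formula (commonly published coefficients) but count differently. Program A treats a number as one syllable and ends sentences only at a period, question mark, or exclamation mark. Program B counts one syllable per digit, so 2019 becomes four, and also treats a semicolon as the end of a sentence. Both counting rules, and the crude vowel-group syllable counter they share, are our own illustrations, not the rules of any real product.

```python
import re

def fk_grade(words: int, sentences: int, syllables: int) -> float:
    # Flesch-Kincaid grade level, commonly published coefficients.
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59

def syllables_in_word(word: str) -> int:
    # Crude heuristic: one syllable per run of consecutive vowels.
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))

def score(text: str, syllable_per_digit: bool, semicolon_ends_sentence: bool) -> float:
    tokens = re.findall(r"[A-Za-z]+|\d+", text)
    syllables = 0
    for token in tokens:
        if token.isdigit():
            # Hypothetical rule A: a number is one syllable.
            # Hypothetical rule B: each digit is one syllable ("2019" -> 4).
            syllables += len(token) if syllable_per_digit else 1
        else:
            syllables += syllables_in_word(token)
    enders = r"[.!?;]" if semicolon_ends_sentence else r"[.!?]"
    sentences = [s for s in re.split(enders, text) if s.strip()]
    return fk_grade(len(tokens), len(sentences), syllables)

text = "The rule changed in 2019; it applies to all new leases."
program_a = score(text, syllable_per_digit=False, semicolon_ends_sentence=False)
program_b = score(text, syllable_per_digit=True, semicolon_ends_sentence=True)
print(round(program_a, 1), round(program_b, 1))  # 4.8 5.9
```

Nothing about the sentence changed, yet the grade level moves by more than a whole grade.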

If these formulas don’t agree, and the computer programs don’t agree, where is the reliability? Nowhere.

2. Readability scores are not valid

Readability formulas claim to be able to predict who would be able to read the content. To be valid, those claims would have to be true. But let’s consider what readability really means.

Readability isn’t just being able to see letters or words on a page or screen. That’s legibility.

For these formulas to be useful, readability must include understanding. You want people to get meaning from what they can see. The content must be useful to readers – answer their questions, inform them, entertain them, or make them think.

No formula can answer the following critical questions:

- Is the content what your readers want and need?

- Is the key message near the beginning?

- Do meaningful headings help make the content manageable?

- Does the content flow logically from one point to the next?

- Are sections and paragraphs short enough to engage readers?

- Are there lists, tables, pictures, and charts where they can help – especially in content that people would skim and scan?

- Is the page or screen layout inviting and useful?

A poor readability score tells you only that you have some combination of excessively long sentences and too many long words. It does not tell you what else you need to do to improve your content. A good score doesn’t tell you whether your content creates a good conversation. No score can accurately predict whether an actual person would be able to understand your content.

3. Readability formulas don’t consider the meaning of words

You might say, “Okay, but I’m just using the formula to see if my content has long sentences and big words.” But a big word might be one your readers know, while a short word could be one they don’t know. If a formula only counts word length, it doesn’t consider whether your readers are likely to know what the words mean.

For example, compare these two sentences:

I wave my hand.

I waive my rights.

Even though wave is a familiar word for far more readers than waive, most formulas give both sentences exactly the same score.

Only the Dale-Chall Formula, which uses a word list, gives wave a better score than waive. The original Dale-Chall list had words that 80% of fourth-graders in American schools (9 to 10 years old) knew in 1948. Dale compiled a much larger, revised list in 1984 – again words that 80% of fourth-graders knew. After Dale’s death, Jeanne Chall published the 1984 version in her 1995 book Readability Revisited: The New Dale-Chall Readability Formula – not long before her own death in 1999. The list reflects an era when agriculture played a bigger part in everyday life than it does today. For example, we may recognise the word bushel, but how many of us know how many gallons one contains without an Internet search?

However, even the Dale-Chall formula doesn’t distinguish between different meanings of the same word. Enter in a software instruction isn’t the enter that made it onto the Dale-Chall list. Cookie for a website isn’t the same cookie that Dale’s fourth-graders said they knew.
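A word-list check is easy to sketch, and the sketch also shows its limit. The Python below assumes a tiny stand-in list of our own (not the real Dale-Chall list) and flags any word that is not on it. It separates wave from waive, but because it looks only at spelling, cookie passes whether it means something to eat or something a website stores.

```python
import re

# Hypothetical stand-in for a familiar-word list; NOT the real Dale-Chall list.
FAMILIAR_WORDS = {"i", "my", "hand", "wave", "rights", "ate", "a", "cookie", "clear", "the"}

def unfamiliar_words(sentence: str) -> list[str]:
    # Lower-case the words and return any that are not on the list.
    words = re.findall(r"[a-z]+", sentence.lower())
    return [w for w in words if w not in FAMILIAR_WORDS]

print(unfamiliar_words("I wave my hand."))     # []
print(unfamiliar_words("I waive my rights."))  # ['waive']
# The lookup sees only spelling, so both meanings of "cookie" pass.
print(unfamiliar_words("I ate a cookie."))     # []
print(unfamiliar_words("Clear the cookie."))   # []
```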

And you can’t just compensate for the formula by declaring that “kids today know these new meanings of the words.” You would need to redo the research to get a modern-day list.

We have better ways than the Dale-Chall Formula to check the vocabulary you are using. We’ll describe them later, when discussing what to do instead of running a readability formula.

4. Grade levels are meaningless for adults

You might be concerned about reaching low-literacy adults and therefore be thinking about writing to a specified grade level.

Of course, writing for your readers is essential. But does a readability formula actually help you do that? What does it mean to say that an adult reads at an eighth-grade level or has a reading age of 13?

An eighth-grader – around 13 years old – who reads at that grade level is a fluent reader. An adult whose reading has never progressed beyond age 13 struggles over text, very likely hates reading, and probably avoids reading as much as possible. But that adult has much greater life experience and knows many ideas and words that an eighth-grader would not know.

A True Story From Ginny: I vividly remember testing a revised lease for renting an apartment. Our readers were low-income, low-literacy tenants. Our aim was to make sure they understood what they were signing. Most of our changes worked well. One did not.

We had changed the heading “Security Deposit” to “Promise Money.” All the readability formulas gave our new heading a better score – fewer syllables, more common words.

So we took our before and after versions to a community center where we knew we could easily meet some of the low-income people who needed to understand the lease. By offering to pay them for their time, we got several people to try out the different versions.

Most of the people in this try-out did struggle with reading. They read the words promise money more easily than security deposit. But when they read promise money they were agitated, exclaiming “What’s that?” or “I’ve never heard of that!”

They had to sound out security deposit, but once they had the words, they were much calmer, saying, “Oh, yes. You always have to pay a security deposit.” We must respect the knowledge that our low-literacy adult readers have.

Grade level is a meaningless concept when writing for adults. What we really care about – and what modern literacy assessments look for – is functional literacy: Can adults understand what they are reading so they can do the tasks they need to do to find and keep jobs, take care of themselves and their families, and so on?

5. Readability formulas assume they’re measuring paragraphs of text

All of these formulas are meant to assess continuous paragraphs with full sentences. For example, the SMOG Index requires a minimum of 30 sentences: ten sentences from the start of the text, ten from the middle, and ten from the end.

No formula can grade an e-commerce page that is mostly images and fragments. None can grade a form.

Some websites claim that the FORCAST formula works with forms because it counts only words, not sentences. The FORCAST formula was developed in 1973 to help the US Army assess written materials to be used by military personnel. It is based solely on the number of single-syllable words in a sample of at least 150 continuous words.
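As it is commonly published, the FORCAST formula fits in a few lines: grade level = 20 − N/10, where N is the number of single-syllable words in a 150-word sample. The Python sketch below assumes you have already counted N; the function name and the sample count of 110 are ours, for illustration.

```python
def forcast_grade(single_syllable_words: int) -> float:
    # FORCAST as commonly published: grade = 20 - N/10,
    # where N = single-syllable words in a 150-word sample.
    if not 0 <= single_syllable_words <= 150:
        raise ValueError("N must be between 0 and 150 for a 150-word sample")
    return 20 - single_syllable_words / 10

print(forcast_grade(110))  # 9.0
```

Nothing in it depends on sentences, which is why it can look attractive for forms. But it still assumes a sample of 150 continuous words to count from.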

But the FORCAST developers didn’t use forms in their research. They used text passages comprising full sentences, just as the developers of other formulas did. They just found that they didn’t need sentence length as part of their formula. But if your form includes chunks of 150 words, you have a different and simpler problem: far too many words.

(As it happens, a 1980 US Army review, Readability Objectives: Research and State-of-the-Art Applied to the Army’s Problems, condemned the use of readability formulas for Army purposes, with arguments similar to ours here.)

Lists are also a challenge for readability formulas. Just ending each item with or without a period could change your content’s score dramatically.

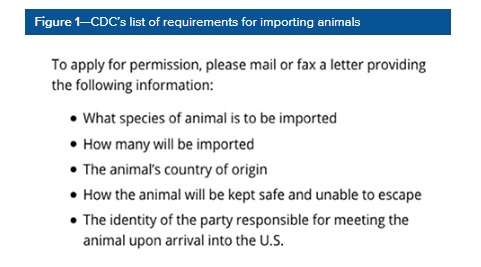

An example: If you were responsible for importing animals for a zoo in the United States, you would need this information:

To apply for permission, please mail or fax a letter providing the following information: what species of animal is to be imported, how many will be imported, the animal’s country of origin, how the animal will be kept safe and unable to escape, and the identity of the party who is responsible for meeting the animal upon arrival into the U.S.

We think you’ll agree that the U.S. Centers for Disease Control and Prevention (CDC) made the right decision when they chose to convey that information as a list, as shown in Figure 1.

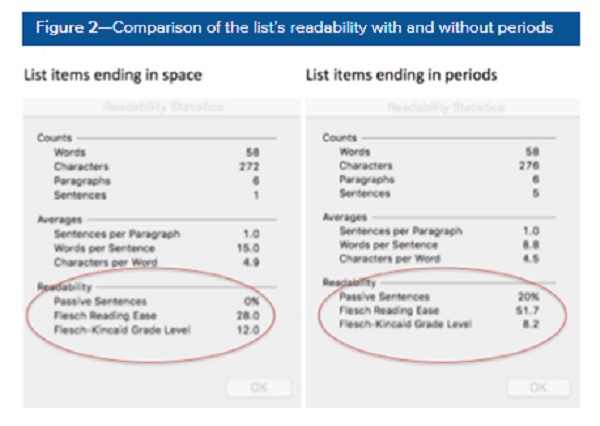

As Figure 2 shows, this text scores poorly with the readability formulas that Microsoft Word uses, giving a Flesch-Kincaid grade level of 12.0. If we put a period at the end of each item, the same text scores very well, with the Flesch-Kincaid grade level reported at 8.2. However, we would then be adding periods to pieces of text that are not whole sentences.
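The arithmetic behind that jump is easy to see. In the Flesch-Kincaid formula (commonly published coefficients), average sentence length is words divided by sentences, so changing how many sentences a program detects changes the score even when every word stays the same. The counts below are hypothetical, not the counts for the CDC list, and we do not know exactly how Word splits list items into sentences.

```python
def fk_grade(words: int, sentences: int, syllables: int) -> float:
    # Flesch-Kincaid grade level, commonly published coefficients.
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59

# Hypothetical five-item list: 40 words and 60 syllables in total.
# Without end punctuation, a program may treat the whole list as one sentence;
# with a period after each item, it may count five.
no_periods = fk_grade(40, 1, 60)
with_periods = fk_grade(40, 5, 60)
print(round(no_periods, 1), round(with_periods, 1))  # 17.7 5.2
```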

6. Revising text to get a better score misses the point

A poor score from a readability formula likely means the content has problems, but it doesn’t tell you what those problems are. Readability formulas are about correlations, not causes.

For example, long sentences often occur in writing that is difficult for some people to read, but that does not mean sentence length is the main or only problem for those people.

You cannot make your communication succeed just by shortening sentences and words. You would be treating symptoms without analyzing what those symptoms mean. You wouldn’t have identified the disease or learned how to cure it.

7. Good scores don’t mean that you have useful or usable content

A good readability score doesn’t tell you whether your content works to meet your goals or your readers’ needs. You also need answers to all the questions we listed earlier.

Furthermore, when writers correct just the elements that a formula counts, they may unintentionally introduce other problems for readers. For example, Tom Duffy and Paula Kabance tested four versions of the same content: the original text, a version with shortened sentences, one with simplified words, and one with both shortened sentences and simplified words. Readability scores dropped by as much as six grades from the original text to the version with both changes. But the changes did not result in better comprehension.

A few years later, Leslie Olsen and Rod Johnson analyzed the four texts from the Duffy and Kabance study to see whether they could figure out what had caused those results. They found that the changed versions had lost the cohesion that makes text flow logically and remain meaningful to readers. Changing the elements that the formulas count created other problems that the formulas didn’t see.

When you use a readability formula – especially if you are under pressure to get a particular number or grade level – you must resist the seemingly easy fix of tweaking your text to get a better score. You might make things worse!

The better way is not to use readability formulas at all. We now have much better ways to judge how useful and usable our content is. Let’s look at those better ways.

Instead of using readability formulas, use these techniques

Let’s look at how to get a good first draft, then at how to evaluate that draft.

Follow a user-centered process to ensure a readable first draft

We’ll start with four ways to get a really good first draft.

1. Write for the people who read what you write

The definition of plain language has moved away from writing short sentences and using simple words to focusing on the people who read what you write. As PlainLanguage.gov puts it:

Don’t write for an 8th-grade class if your audience is composed of PhD candidates, small business owners, working parents, or immigrants. Only write for 8th graders if your audience is, in fact, an 8th-grade class.

2. Do user research – or create an assumptive persona

Don’t guess about the people you are writing for. Do user research to find out what readers know and don’t know, what words they use for your topic, and how motivated they are to come to and use your content.

However, if you cannot do any user research before you start writing, create a short story about someone who must read what you write. The name for such a story is an assumptive persona. To create an assumptive persona, complete the blanks in these sentences:

Today, ___________ (someone’s name) decided to read ____________ (name of your content item) because ___ (appropriate pronoun) wanted to ____________________________ (reason why the person is reading).

When ___________ (person’s name) comes to my content, ___ (appropriate pronoun) is __________, _________, and ________ (adjectives about that person’s mental state, such as busy, anxious, angry, curious, or tired).

Hint – The reason the person comes to your content is almost never “because I want something to read.”

Assumptive personas help in two ways:

- They give you a way of focusing on your readers by walking your personas through a conversation with your content.

- They help you notice the assumptions you are making about your readers so you can research those assumptions later.

Just be sure you think realistically about who would come to your content. In almost every case, you are not your user!

3. Follow guidelines for clear writing

Short sentences and short words are not the only critical elements of good writing. For example, writing in active voice is also important – although no readability formula counts actives versus passives. (Grammar checkers do, but they’re different from the formulas.)

Your organisation might have its own guidelines for clear writing in a style guide, voice-and-tone guide, or a design system. Also consider using the guidelines at PlainLanguage.gov.

4. Use checkers, but don’t let them change your writing automatically

If you want to be sure that you are using common words, use a vocabulary checker. When writing this column, we searched for vocabulary checker and chose one of the available tools. It helped us decide to change a few complex words in this column. For example, we changed discern to see. Just remember that a vocabulary checker cannot tell you whether you have used a word appropriately.

Many programs for creating content have a spelling checker built in. Some also include a grammar checker. You can also easily find separate spelling and grammar checkers online.

Never let either a spelling checker or grammar checker change your content automatically. Treat both as sources of red flags. See what they say and decide for yourself what to do. The checkers we are familiar with, especially grammar checkers, often indicate something is wrong when it isn’t.

Spelling checkers and grammar checkers can be helpful, but be careful. They can also deceive you, as we are reminded by Mark Eckman and Jerrold Zar’s famous poem Candidate for a Pullet Surprise.

Test your text with people to find out whether it’s readable

We have several ways for potential users to try out our content.

Test content with the people you want to reach

Usability testing is, of course, the best way to know how well your content would work for the people you want to reach. You don’t need many people, a lot of money, or a laboratory. If you know your content exists specifically to help people complete a task, ask them to use it for that task.

For short documents that people might read from beginning to end, such as letters and notices, paraphrase testing is a great technique. For longer documents, or any content where you want participants to read every word, try a plus-minus technique:

“Participants are asked to read a document and put pluses and minuses in the margin for positive and negative reading experiences. After that, the reasons for the pluses and minuses are explored in an individual interview.” De Jong, M. and P. Schellens (2000). Toward a document evaluation methodology: What does research tell us about the validity and reliability of evaluation methods?

You can choose what positive and negative mean for your document, depending on its purpose. Pete Gale describes using a plus-minus technique to explore confidence in his blog post A simple technique for evaluating content.

Get help from a person who isn’t familiar with the content

If you are not allowed to test your content with anyone who is actually a potential reader, the next best option is testing with anyone – anyone at all – who is not familiar with it. Ask the person to read the content out loud to you. Don’t ask them whether they like it. Ask them to tell you what it means. If it is about a task, ask them what they would do next.

Having even one or two people go through your content will teach you much more about it than any readability formula can.

Summary: do not use readability formulas

Readability formulas are neither reliable nor valid. Grade levels are not meaningful for adults. Adults who have trouble reading typically know a lot of words and concepts that a grade-level readability test would assume they don’t know.

A poor readability score doesn’t tell you how to fix your content. Moreover, revising your content to get a better score could make it harder for people to understand the content. A good score doesn’t guarantee success because usability and usefulness rely on many elements the formulas don’t consider.

Writing is a user-centered process, so having users try out your content is a much better way of ensuring success than using a readability formula.
