Here’s a question for you: what is your social security number?

If you’re from the US, you probably thought: “Why should I tell you that?” From elsewhere, you probably thought, “I don’t have one of those. Does it matter?”

Either reaction shows that simply asking a question—whether on a website, in a survey, or elsewhere—isn’t enough to guarantee an answer.

To get an answer, I’d have to persuade US readers to trust me: to feel comfortable that I have a legitimate reason for asking and that I’ll look after an important piece of personal data appropriately. And I’d have to persuade readers from elsewhere to trust that the effort of working out how to deal with that question will be repaid. Either way, trust is crucial. If you don’t trust me to make proper use of the data, you won’t want to give me that data, or make the effort of obtaining it for me.

Trust and the reservoir of goodwill

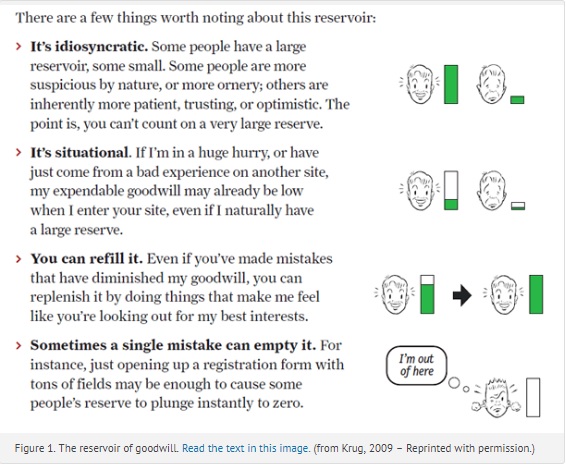

In the second edition of Don’t Make Me Think! A Common Sense Approach to Web Usability, Steve Krug suggests that users come to each page or form with a “reservoir of goodwill” (see Figure 1). If the level of goodwill falls too low, users give up on their tasks or, for the more web-savvy, replace their accurate answers with a parallel set of answers that they maintain for this purpose. (The unkind might call this lying.) Either way, the result is poor data quality.

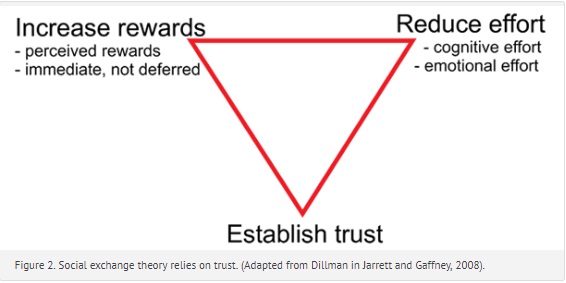

Based on a lifetime of experiments in survey administration and analysis, Don Dillman developed his “social exchange theory” in Internet, Mail, and Mixed-mode Surveys (Dillman et al., 2009). His theory has similarities to Krug’s reservoir of goodwill (see Figure 2).

Dillman points out that higher response rates come from a balance between the user’s perception of the social and financial rewards on the one hand, and the cognitive and emotional effort required on the other, all in the context of trust.

Dillman’s theory is based on extensive research, including one famous experiment that looked at response rates to a paper survey sent out with three different levels of incentive:

- No financial incentive

- Guaranteed $10 offered after return of the completed survey

- A $2 bill enclosed with the mailed paper survey

Although the guaranteed $10 created a small increase in response compared to no incentive, the biggest uplift came from the $2 bill. Dillman explains this in terms of trust. There is a small immediate reward, but what the $2 bill really demonstrates is that the organisation issuing the survey trusts the respondent enough to hand out the reward in advance. And enough respondents feel the urge to reciprocate that trust to make an important contribution to response rates. Contrast that with the $10; even though it’s guaranteed, it requires that the respondent take the first step in demonstrating trust.

Sponsorship and response rates

The typical Dillman survey is a major, lengthy investigation that will inform public policy. Within that context, he recommends that you send a letter ahead of the survey from an appropriate, respected figure of authority to help create trust and lift response rates.

It’s not enough for you, as designer of the survey, to respect the authority; the users have to have the same reaction. In one experiment (Bhandari et al. in the International Journal of Epidemiology, 2003), the letter-in-advance idea didn’t work for orthopaedic surgeons. An appeal from a committee of expert surgeons actually depressed response rates by 19 percent. The authors suggested that super-specialists might be perceived as arrogant by other surgeons. (The ungenerous amongst us might wonder whether some surgeons are sufficiently arrogant to feel annoyed that others are being lauded as experts above themselves.)

Your questions in a credible context

Most of us aren’t doing such substantial surveys. We’re working on little, “What did you think of this website today?” bits of feedback, or on forms that are as short as we can make them and still be consistent with their purpose.

So a whole letter ahead of time? Definitely not. The effort of reading the letter would be disproportionate: it would increase the perceived effort without a large enough increase in trust to justify it. Instead, the user’s experience of the website before reaching the form has to establish your credibility.

In our book Forms that Work (Jarrett and Gaffney, 2009), we point out that one small thing you can do to build trust is to give the user a form when a form is expected. That is, don’t offer a long list of instructions or hide the form away somewhere. Get right to it.

Without a prior notification or letter, the page of questions must stand alone as trustworthy in its own right, continuing the build-up of trust achieved by the website where it originated. If you hand your users over to a third-party service, such as a payment handler or a market research business for your satisfaction surveys, then you need to think carefully about whether to keep continuous visual branding or to opt for the branding of the third party.

How you use the data

If it looks like you won’t use the data against them, users are more likely to trust you with it. There’s an interesting result from an experiment where pages were branded like a cheap quiz in the typeface Comic Sans (Figure 3), or branded like an authoritative survey from a respected university (Figure 4). Teenagers were more likely to admit to illegal behaviours, such as dope smoking, when answering the cheap quiz.

Are you thinking, “Gullible teens can be trapped by anything”? Well, think again. The researchers achieved similar results with New York Times readers. They responded to a questionnaire branded as a “Test Your Ethics” quiz on a columnist’s blog, and they revealed more unethical behaviours than they did on the same questionnaire sponsored by a university.

Please don’t think that I advocate hiding your data collection activities behind Comic Sans quizzes. That would verge on “black hat” methods that would be unprofessional (as well as unethical).

Questions influence trust

When I discuss the topic of trust in the context of surveys and forms with web-savvy audiences at conferences, they often claim to scan the questions ahead of time and then make a decision about whether they trust the organisation well enough to fill in the form.

I do not know of an experimental comparison that has investigated similar issues in a controlled context, but I have often observed users in usability tests who, on a multi-page form, opt to “fill in this form with any old thing” to explore the set of questions, with the intention of repeating the process with their real data at some point later. If they encounter questions that they consider inappropriate, they will be gone, never to return.

Preserving trust

So far, we’ve been looking at the way that trust can affect the willingness to answer. If we turn that around, the question-and-answer process can also affect the longer-term trust in the brand. Just as a bad experience with a form can empty that reservoir of goodwill, so can a good one refill it.

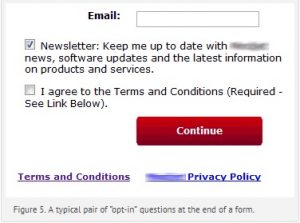

The longer-term view leads me to a final thought: the dreaded “opt-in” questions that appear at the end of so many forms, such as those in Figure 5.

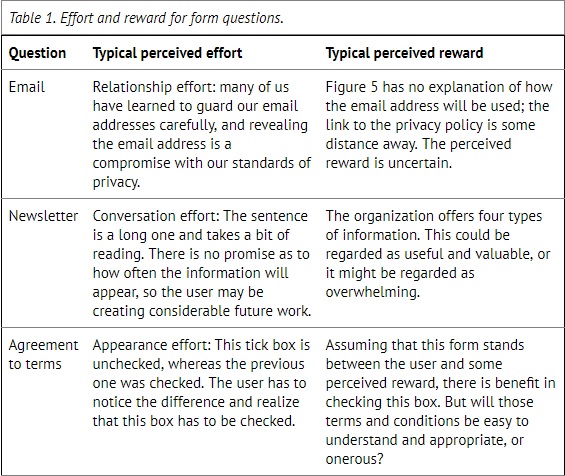

Table 1 summarises the perceived effort and perceived reward from each of those questions. Questions like these are frequent, and you may well argue that they are familiar and that users cope with them perfectly well. (Or you may have been on the receiving end of similar, “They’ll cope, and we need that data,” instructions from clients and colleagues.)

Of course, both of those arguments are correct. Users will mostly cope with this sort of thing. But will they cope gladly? Isn’t there a risk that they’ll be left with a bit of a twinge, some minor disappointment that your organisation or brand has subjected them to the same old unthinking “opt-in” that they’ve suffered so many times before? Won’t that twinge slightly undermine their trust in you? Is the longer-term damage to the brand really justified by the value of the data?

Summary

It’s important to think about trust when asking questions. A question isn’t just a question; it is part of a social exchange, a conversation that takes place within the context of what users expect from organisations and what they hope to achieve with that conversation.

When designing those exchanges, you should aim to:

- Create a trustworthy context. Make it clear who you are and why you are asking the questions.

- Be consistent. Make sure that the questions themselves are consistent with your organisation’s brand, and the purpose for which they are asked.

- Preserve trust for the next time. Avoid ending the question sequence with a series of minor irritations that can damage the perception of your organisation’s brand.

This article first appeared as Jarrett, C. (2012). “Do you trust me enough to answer this question?” Trust and Data Quality. User Experience Magazine, 11(4).

Featured image Trust by purplejavatroll, creative commons
